I recently worked on a master data management project for a major wholesaler, and I’d like to use this opportunity to share my experience. The customer, operating in 25 countries and undergoing a transformation from physical to omni-channel, was struggling to deliver master data reliably to all the newly built consuming systems, such as web shops and food delivery services, through a myriad of ageing integrations. Before we talk about the solution, let’s take a more detailed look at the business context and associated problems.

Business Context

As with any organisation that has been around for some time, there are systems which have been supporting the core business for years, sometimes even decades. These core systems, the backbone of the customer’s past and continuing success, can be homegrown or specialised off-the-shelf solutions. They are typically very good at what they were built for, but are often prohibitively expensive to extend and adapt, a consequence of their size or specialisation. In the case of a wholesaler, a few examples are the ERP system, the merchandise system, and the logistics system.

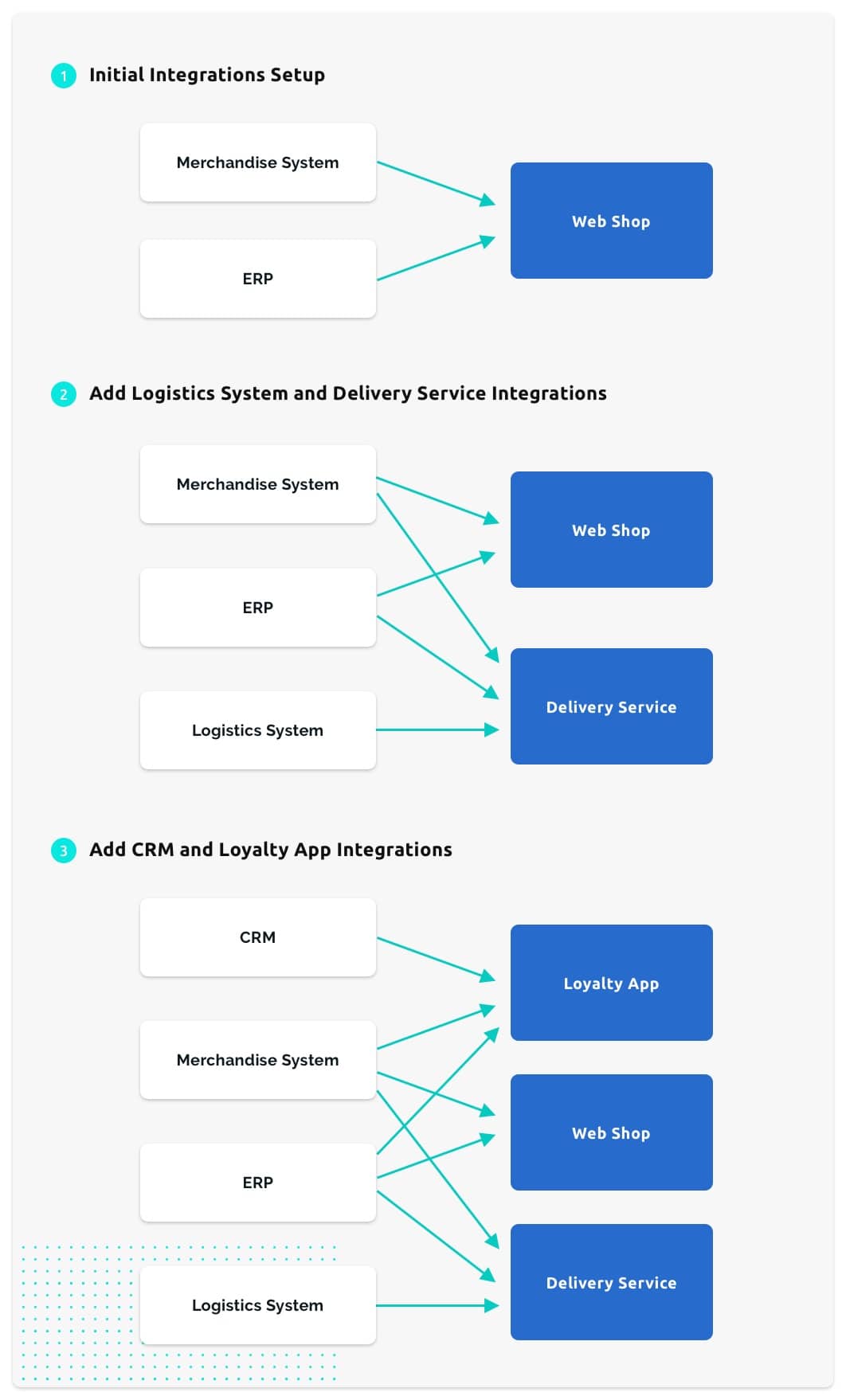

When building a web shop as part of an omni-channel strategy, the organisation needs to reach into these core systems and expose the available data to the end user. In a simplified scenario, the merchandise system would provide information about each article, the logistics system would provide the delivery options, and the ERP system would provide stock information, so the data could be accessed with just a few integrations. In reality, however, at least some of the core systems were historically deployed in a distributed and hierarchical manner across countries, and sometimes across individual brick-and-mortar stores, which significantly increases the number of required integrations.

Enter a food delivery service and the above story repeats itself – there might be some overlap, there might be some differences, but the same data from the same core source systems is accessed in order to implement and provide a new service. This results in a compounding number of integrations and a tremendous waste of resources:

- developers are busy building integrations instead of building new services;

- each additional integration puts unnecessary strain on the core systems;

- each additional integration must be maintained over time.

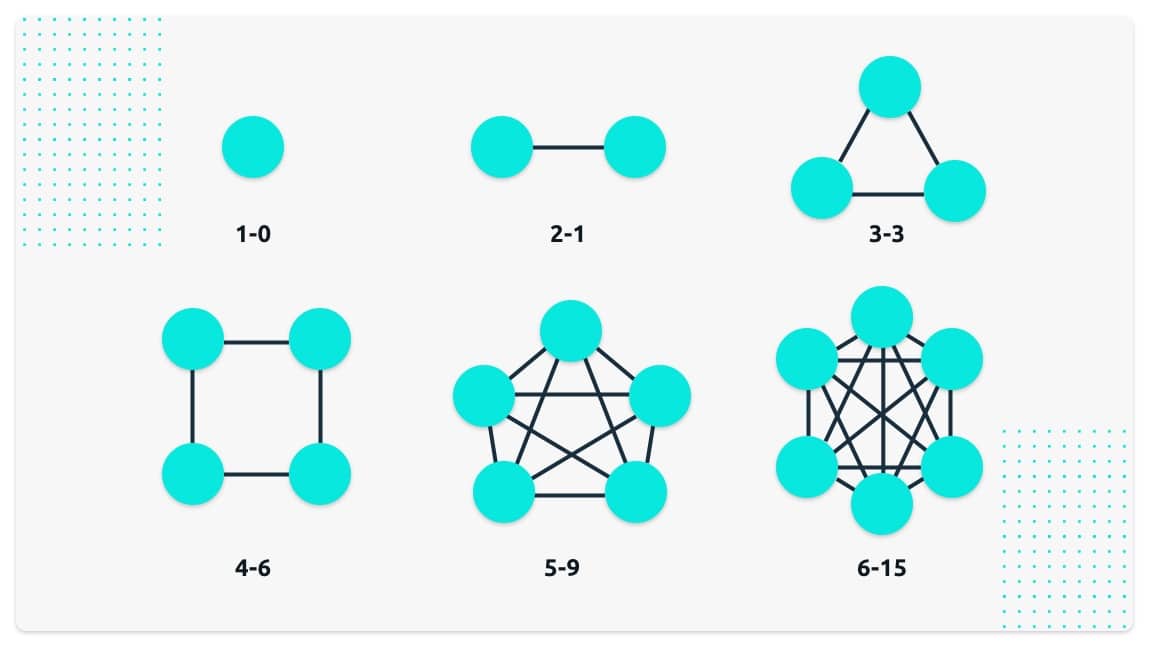

Figure 1 – Growing complexity of systems and interactions

The customer approached us to advise them and to jointly find a sustainable solution, so that they could focus on building value-generating services instead of margin-eating integrations.

Figure 2 – Growing complexity of integrations in a wholesaler system

Requirements Gathering

We considered the requirements of two user groups of the data integration platform – those who provide the data and those who need access to the data.

The data providers are typically the core systems of the organisation. As already mentioned, these systems are prohibitively expensive to extend and adapt, so their owners want as few integrations as possible. That is, they would like to expose their data once, in the most general way, instead of building a specialised integration for each consuming system that exposes only what that consumer needs in its preferred format. Furthermore, despite being good at what they do, these systems typically don’t cope well with over-zealous consumers making too many requests in order to obtain the most up-to-date data. They would therefore like to expose their data when it is convenient for them, for example in nightly batch jobs or when a specific workflow saves the data, instead of responding to on-demand requests. For these reasons, our data integration platform needed to:

- give the providers the tools to expose their data in bulk in the most general way;

- store the exposed data and respond to on-demand consumer requests.

The data consumers, on the other hand, are the newly built end-user-facing applications. They typically want only a subset of the available data, such as the articles relevant to the food delivery service. Furthermore, they would like to have access to the most up-to-date data as soon as it changes. For these reasons, our data integration platform needed to:

- give the consumers the tools to access the specific data they require in the desired granularity;

- notify the consumers of changes to the exact data they are interested in.

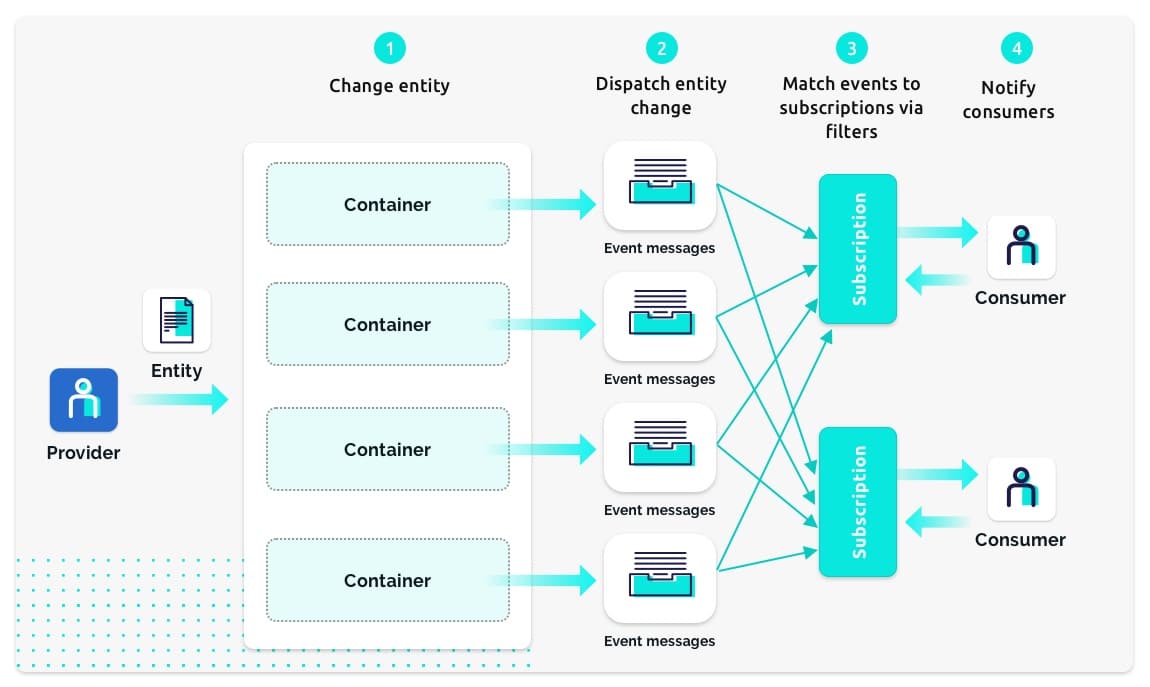

Figure 3 – Integration platform between source systems and consumer services
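To see why a shared platform reduces the integration burden, it helps to count integrations in both setups. The sketch below is a back-of-the-envelope calculation only; the number of core systems and consuming services are illustrative assumptions, not figures from the project, and it assumes the worst case in which every consumer needs every source deployment.

```python
def point_to_point(sources: int, consumers: int) -> int:
    """Worst case: every consumer integrates with every source deployment."""
    return sources * consumers


def via_platform(sources: int, consumers: int) -> int:
    """Each source and each consumer integrates once with a shared platform."""
    return sources + consumers


# Hypothetical figures: 3 core systems deployed separately in each of
# 25 countries, consumed by 2 new services (web shop and food delivery).
sources = 3 * 25
consumers = 2

print(point_to_point(sources, consumers))  # 150
print(via_platform(sources, consumers))    # 77
```

Under these assumptions, each further consuming service adds one integration with the platform instead of up to 75 with the source deployments.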

The Solution

In order to satisfy the above requirements, we needed abstractions for the data flowing into the data integration platform from providers and out of it to consumers.

We chose the container concept to serve as an integration point between data providers and consumers. The providers build integrations through which they expose the data from their domain into one or more containers. For example, the merchandise system would expose all its articles into an articles container while the logistics system would expose the stock levels into a stock-levels container. The platform persistently stores the data in the containers. The consumers build integrations through which they consume the desired data from one or more containers. For example, a web shop application would consume data from both the articles and stock-levels containers.

Each container groups together a number of similar entities. The entities can be anything within the provider’s domain, for example, articles or customers. The container doesn’t impose any constraints on the format or size of the entities – they can be XML, JSON, CSV, or any other textual or binary format.
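The container concept can be illustrated with a minimal in-memory sketch. This is not the platform's actual code: the `Platform` class, its `publish` and `read` methods, and the sample identifier and payloads are all hypothetical, and a real platform would store the data persistently. The point is only that providers push batches of opaque payloads into named containers, and consumers read from the stored copy instead of calling the core systems.

```python
from __future__ import annotations

from typing import Optional


class Platform:
    """Toy in-memory stand-in for the platform; all names are hypothetical."""

    def __init__(self) -> None:
        # container name -> {entity id -> raw payload in any format}
        self._containers: dict[str, dict[str, bytes]] = {}

    def publish(self, container: str, entities: dict[str, bytes]) -> None:
        """Provider side: push a batch of entities into one container."""
        self._containers.setdefault(container, {}).update(entities)

    def read(self, container: str, entity_id: str) -> Optional[bytes]:
        """Consumer side: on-demand lookup served from the stored copy."""
        return self._containers.get(container, {}).get(entity_id)


platform = Platform()

# Two providers publish in bulk, each in its own format (JSON and XML here).
platform.publish("articles", {"4006381333931": b'{"name": "Pen"}'})
platform.publish("stock-levels", {"4006381333931": b'<stock qty="12"/>'})

# A web shop combines data from both containers without touching the providers.
article = platform.read("articles", "4006381333931")
stock = platform.read("stock-levels", "4006381333931")
```

Payloads are kept as raw bytes so that the sketch, like the containers described above, places no constraint on the entity format.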

The containers do, however, require that providers declare a set of key parameters which, together with their associated values, uniquely identify an entity within the container. An article could, for example, be identified by its GTIN (Global Trade Item Number) and GLN (Global Location Number). The platform indexes the entity keys so that consumers can efficiently retrieve the subset of entities matching a filter on the key parameters, such as a partial key.
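As a rough illustration of key parameters and partial-key filters, consider the sketch below. The `Container` class and its `put` and `find` methods are hypothetical names, the key values are made up, and for brevity the lookup scans all entities, whereas the platform described here uses an index.

```python
from __future__ import annotations


class Container:
    """Toy container: entities are addressed by a declared set of key parameters."""

    def __init__(self, key_params: tuple[str, ...]) -> None:
        self.key_params = key_params
        self._entities: dict[tuple[str, ...], bytes] = {}

    def put(self, key: dict[str, str], payload: bytes) -> None:
        """Store a payload under a full key; every key parameter is required."""
        if set(key) != set(self.key_params):
            raise ValueError(f"key must provide exactly {self.key_params}")
        self._entities[tuple(key[p] for p in self.key_params)] = payload

    def find(self, **partial_key: str) -> list[bytes]:
        """Return payloads whose key matches every given key parameter."""
        unknown = set(partial_key) - set(self.key_params)
        if unknown:
            raise ValueError(f"unknown key parameters: {sorted(unknown)}")
        positions = {self.key_params.index(p): v for p, v in partial_key.items()}
        return [
            payload
            for key, payload in self._entities.items()
            if all(key[i] == v for i, v in positions.items())
        ]


articles = Container(key_params=("gtin", "gln"))
articles.put({"gtin": "111", "gln": "store-a"}, b"pen @ A")
articles.put({"gtin": "111", "gln": "store-b"}, b"pen @ B")
articles.put({"gtin": "222", "gln": "store-a"}, b"ink @ A")

# Partial key: all locations for one article.
print(articles.find(gtin="111"))  # [b'pen @ A', b'pen @ B']
```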

Although indexing the entity keys does offer some filtering capabilities, consumers often want to filter on a property found inside the entity content. For this reason, the platform implements a declarative way of specifying which properties of the content should be indexed in addition to the entity key. In the food delivery service example, this allows a consumer to retrieve only the articles belonging to a food category that is specified as a property in the entity content.
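One way to picture declarative property indexing is a mapping from an index name to a path inside the entity content. The sketch below assumes JSON content and scalar property values; the declaration format, the `PropertyIndex` class, and the sample articles are hypothetical and not the platform's actual syntax. Re-indexing on update and removal is left out.

```python
from __future__ import annotations

import json
from collections import defaultdict

# Hypothetical declaration: index name -> path into the JSON content.
INDEXED_PROPERTIES = {"category": ("classification", "category")}


def extract(content: object, path: tuple[str, ...]) -> object:
    """Follow a path of nested keys; return None if any step is missing."""
    node = content
    for step in path:
        if not isinstance(node, dict) or step not in node:
            return None
        node = node[step]
    return node


class PropertyIndex:
    def __init__(self, declaration: dict[str, tuple[str, ...]]) -> None:
        self._declaration = declaration
        # (property name, value) -> keys of the entities having that value
        self._index: dict[tuple[str, object], set[str]] = defaultdict(set)

    def add(self, entity_key: str, raw: bytes) -> None:
        """Index the declared properties of one JSON entity."""
        content = json.loads(raw)
        for name, path in self._declaration.items():
            value = extract(content, path)
            # Only scalar values are indexed in this sketch.
            if isinstance(value, (str, int, float, bool)):
                self._index[(name, value)].add(entity_key)

    def lookup(self, name: str, value: object) -> set[str]:
        return set(self._index.get((name, value), set()))


index = PropertyIndex(INDEXED_PROPERTIES)
index.add("111", b'{"name": "Apple", "classification": {"category": "food"}}')
index.add("222", b'{"name": "Pen", "classification": {"category": "stationery"}}')

print(index.lookup("category", "food"))  # {'111'}
```

Because the declaration is data, not code, a new indexed property could be added without changing the provider's integration.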

Finally, so that consumer integrations can efficiently target subsets of entities based on filters, the platform also implements a subscription facility. That is, a consumer can specify one or more filters and be notified in real time whenever an entity matching any one of them changes. This allows consumers to simply monitor their subscriptions and update their data reactively, instead of implementing complicated synchronisation mechanisms over the whole data set.
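The subscription idea can be sketched as a list of filters paired with a callback. Everything here is an assumption made for illustration: the `Subscriptions` class, the representation of filters as field/value pairs, and the synchronous callback, which stands in for whatever delivery mechanism the real platform uses.

```python
from typing import Callable, Dict, List, Tuple

Entity = Dict[str, str]    # key parameters and indexed properties of one entity
Filter = Dict[str, str]    # every listed field must equal the given value
Callback = Callable[[Entity], None]


def matches(entity: Entity, flt: Filter) -> bool:
    """True if the entity has every field/value pair listed in the filter."""
    return all(entity.get(field) == value for field, value in flt.items())


class Subscriptions:
    def __init__(self) -> None:
        self._subs: List[Tuple[List[Filter], Callback]] = []

    def subscribe(self, filters: List[Filter], callback: Callback) -> None:
        """Register interest in entities matching any one of the filters."""
        self._subs.append((filters, callback))

    def entity_changed(self, entity: Entity) -> None:
        """Called whenever an entity is created or updated."""
        for filters, callback in self._subs:
            # Notify once per subscription, even if several filters match.
            if any(matches(entity, f) for f in filters):
                callback(entity)


received: List[Entity] = []
subs = Subscriptions()
subs.subscribe([{"category": "food"}, {"gln": "store-a"}], received.append)

subs.entity_changed({"gtin": "111", "gln": "store-b", "category": "food"})
subs.entity_changed({"gtin": "222", "gln": "store-b", "category": "stationery"})

print(len(received))  # 1
```

Only the first change reaches the consumer, so it can update just that article instead of re-synchronising the whole container.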

Figure 4 shows a simplified Data Integration Platform diagram.

Figure 4 – The Data Integration Platform diagram

Summary

In this post, we introduced the business context in which a compounding number of integrations results in a maintenance nightmare and hinders the creation of value-adding services. We described the requirements of providers and consumers for a data integration platform which aims to solve this problem. Finally, we outlined a high-level solution which allows providers to integrate once in a general way and consumers to integrate in the most specific way by subscribing to exactly the changes they are interested in. Stay tuned for the next post, in which we will give more detailed insights into the architecture and implementation of the data integration platform.

Marco, Backend Developer at MobiLab.